Originally posted on the Google AI Blog

Significant advances in machine learning, speech recognition, and language technologies are rapidly transforming the way in which

recommender systems engage with users. As a result,

collaborative interactive recommenders (CIRs)—recommender systems that engage in a deliberate sequence of interactions with a user to best meet that user's needs—have emerged as a tangible goal for online services.

Despite this, the deployment of CIRs has been limited by challenges in developing algorithms and models that reflect the qualitative characteristics of sequential user interaction.

Reinforcement learning (RL) is the de facto standard ML approach for addressing sequential decision problems, and as such is a natural paradigm for modeling and optimizing sequential interaction in recommender systems. However, it remains under-investigated and under-utilized for use in CIRs in both research and practice. One major impediment is the lack of general-purpose simulation platforms for sequential recommender settings, whereas simulation has been one of the primary means for developing and evaluating RL algorithms in real-world applications like

robotics.

To address this, we have developed

RᴇᴄSɪᴍ (available

here), a configurable platform for authoring simulation environments to facilitate the study of RL algorithms in recommender systems (and CIRs in particular). RᴇᴄSɪᴍ allows both researchers and practitioners to test the limits of existing RL methods in synthetic recommender settings. RecSim’s aim is to support simulations that mirror specific aspects of user behavior found in real recommender systems and serve as a controlled environment for developing, evaluating and comparing recommender models and algorithms, especially RL systems designed for sequential user-system interaction.

As an open-source platform, RᴇᴄSɪᴍ: (i) facilitates research at the intersection of RL and recommender systems; (ii) encourages reproducibility and model-sharing; (iii) aids the recommender-systems practitioner, interested in applying RL to rapidly test and refine models and algorithms in simulation, before incurring the potential cost (e.g., time, user impact) of live experiments; and (iv) serves as a resource for academic-industry collaboration through the release of “realistic” stylized models of user behavior without revealing user data or sensitive industry strategies.

Reinforcement Learning and Recommendation Systems

One challenge in applying RL to recommenders is that most recommender research is developed and evaluated using static datasets that do not reflect the sequential, repeated interaction a recommender has with its users. Even those with temporal extent, such as

MovieLens 1M, do not (easily) support predictions about the long-term performance of novel recommender policies that differ significantly from those used to collect the data, as many of the factors that impact user choice are not recorded within the data. This makes the evaluation of even basic RL algorithms very difficult, especially when it comes to reasoning about the long-term consequences of some new recommendation policy—

research shows changes in policy can have long-term, cumulative impact on user behavior. The ability to model such user behaviors in a simulated environment, and devise and test new recommendation algorithms, including those using RL, can greatly accelerate the research and development cycle for such problems.

Overview of RᴇᴄSɪᴍ

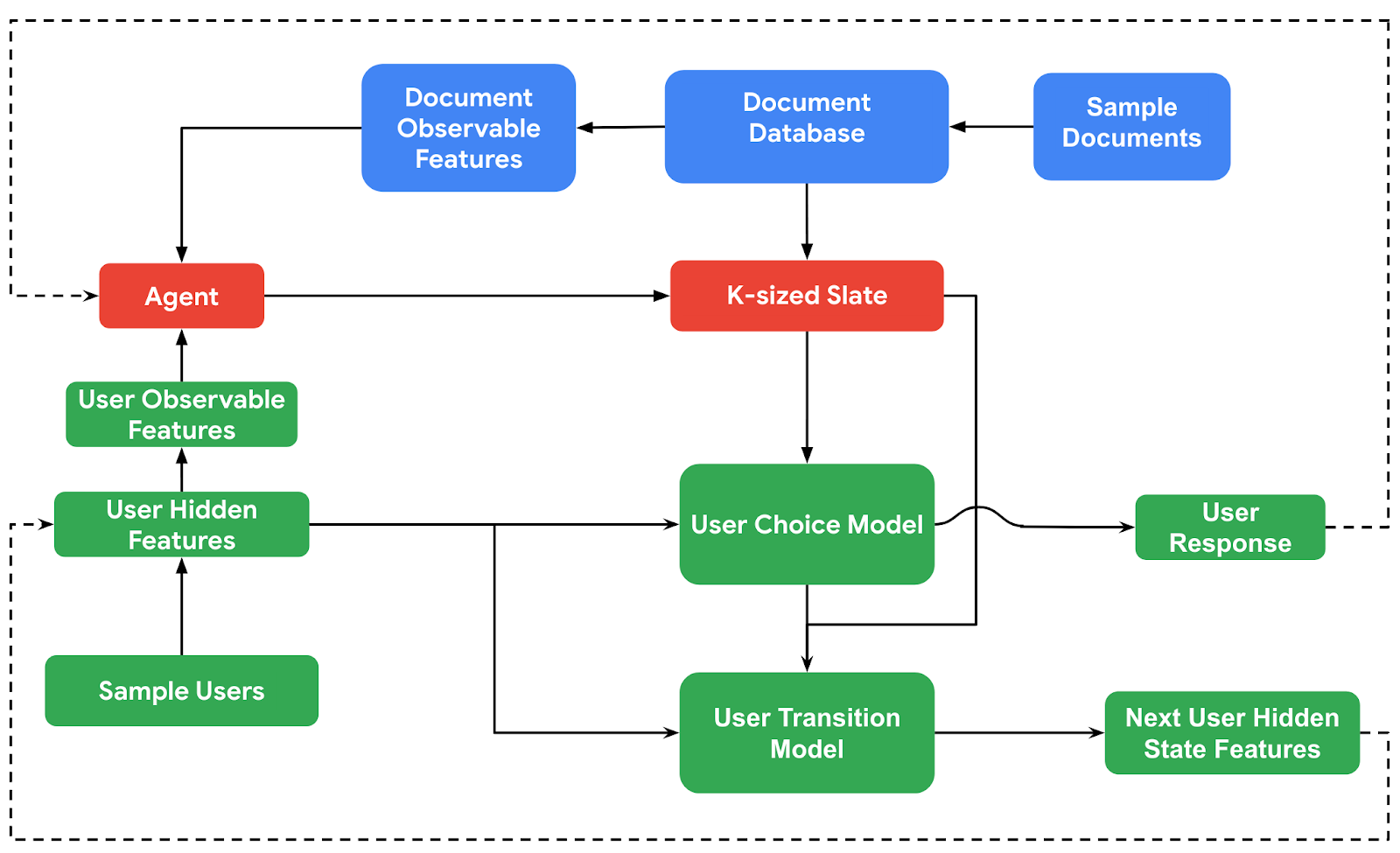

RᴇᴄSɪᴍ simulates a recommender agent’s interaction with an environment consisting of a

user model, a document model and a user choice model. The agent interacts with the environment by recommending sets or lists of documents (known as slates) to users, and has access to observable features of simulated individual users and documents to make recommendations. The user model samples users from a distribution over (configurable) user features (e.g., latent features, like interests or satisfaction; observable features, like user demographic; and behavioral features, such as visit frequency or time budget). The document model samples items from a prior distribution over document features, both latent (e.g., quality) and observable (e.g., length, popularity). This prior, as all other components of RᴇᴄSɪᴍ, can be specified by the simulation developer, possibly informed (or learned) from application data.

The level of observability for both user and document features is customizable. When the agent recommends documents to a user, the response is determined by a user-choice model, which can access observable document features and all user features. Other aspects of a user’s response (e.g., time spent engaging with the recommendation) can depend on latent document features, such as document topic or quality. Once a document is consumed, the user state undergoes a transition through a configurable user transition model, since user satisfaction or interests might change.

We note that RᴇᴄSɪᴍ provides the ability to easily author specific aspects of user behavior of interest to the researcher or practitioner, while ignoring others. This can provide the critical ability to focus on modeling and algorithmic techniques designed for novel phenomena of interest (as we illustrate in two applications below). This type of abstraction is often critical to scientific modeling. Consequently, high-fidelity simulation of all elements of user behavior is not an explicit goal of RᴇᴄSɪᴍ. That said, we expect that it may also serve as a platform that supports “sim-to-real” transfer in certain cases (see below).

|

| Data Flow through components of RᴇᴄSɪᴍ. Colors represent different model components — user and user-choice models (green), document model (blue), and the recommender agent (red) |

Applications

We have used RᴇᴄSɪᴍ to investigate several key research problems that arise in the use of RL in recommender systems. For example, slate recommendations can result in RL problems, since the parameter space for action grows exponentially with slate size, posing challenges for exploration, generalization and action optimization. We used RᴇᴄSɪᴍ to develop

a novel decomposition technique that exploits simple, widely applicable assumptions about user choice behavior to tractably compute

Q-values of entire recommendation slates. In particular, RᴇᴄSɪᴍ was used to test a number of experimental hypotheses, such as algorithm performance and robustness to different assumptions about user behavior.

Future Work

While RᴇᴄSɪᴍ provides ample opportunity for researchers and practitioners to probe and question assumptions made by RL/recommender algorithms in stylized environments, we are developing several important extensions. These include: (i) methodologies to fit stylized user models to usage logs to partially address the “sim-to-real” gap; (ii) the development of natural APIs using

TensorFlow’s probabilistic APIs to facilitate model specification and learning, as well as scaling up simulation and inference algorithms using accelerators and distributed execution; and (iii) the extension to full-factor, mixed-mode interaction models that will be the hallmark of modern CIRs—e.g., language-based dialogue,

preference elicitation, explanations, etc.

Our hope is that RᴇᴄSɪᴍ will serve as a valuable resource that bridges the gap between recommender systems and RL research — the use cases above are examples of how it can be used in this fashion. We also plan to pursue it as a platform to support academic-industry collaborations, through the sharing of stylized models of user behavior that, at suitable levels of abstraction, reflect a degree of realism that can drive useful model and algorithm development.

Further details of the RᴇᴄSɪᴍ framework can be found in the

white paper, while code and colabs/tutorials are available

here.

Acknowledgements

We thank our collaborators and early adopters of RᴇᴄSɪᴍ, including the other members of the RᴇᴄSɪᴍ team: Eugene Ie, Vihan Jain, Sanmit Narvekar, Jing Wang, Rui Wu and Craig Boutilier.

By Martin Mladenov, Research Scientist and Chih-wei Hsu, Software Engineer, Google Research